Configuring Spark Logging and the History Server

Configuring Spark Logging

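I have been experimenting with Spark recently and found that when Spark runs on YARN (Spark 1.6.2, Hadoop 2.7.1), an application's console output via System.out and its Logger output are not recorded in the logs of the YARN nodes that execute it. As a result, both the YARN UI and the yarn logs -applicationId <app ID> command only show how the application was started and stopped on YARN, not the output of the application itself.

To capture the application's output, you have to rely on Spark's own logging. The official documentation says: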

To use a custom log4j configuration for the application master or executors, here are the options:

- upload a custom log4j.properties using spark-submit, by adding it to the --files list of files to be uploaded with the application.

- add -Dlog4j.configuration=<location of configuration file> to spark.driver.extraJavaOptions (for the driver) or spark.executor.extraJavaOptions (for executors). Note that if using a file, the file: protocol should be explicitly provided, and the file needs to exist locally on all the nodes.

- update the $SPARK_CONF_DIR/log4j.properties file and it will be automatically uploaded along with the other configurations. Note that other 2 options has higher priority than this option if multiple options are specified.

Note that for the first option, both executors and the application master will share the same log4j configuration, which may cause issues when they run on the same node (e.g. trying to write to the same log file).

In other words, there are three ways to configure logging for the driver or the executors:

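- Pass the --files option when submitting the application to upload a log4j configuration file.

- Set the location of the log4j file in the configuration used to submit the application.

- Edit the shared log4j configuration file in the conf directory; it is uploaded automatically when the application is submitted.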
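As a rough illustration of the first option, the sketch below wraps spark-submit in a small function that uploads a log4j.properties file with --files. The properties path, the class name com.example.MyApp, and my-app.jar are hypothetical placeholders, and the SPARK_SUBMIT override is only a convenience added here for dry runs.

```shell
#!/usr/bin/env bash
# Sketch of option 1: ship a custom log4j.properties with the application.
# The properties path, class name and jar are hypothetical placeholders.

submit_with_log4j() {
  local log4j_file="$1" app_class="$2" app_jar="$3"
  # SPARK_SUBMIT defaults to spark-submit on PATH; set it to `echo` for a dry run.
  "${SPARK_SUBMIT:-spark-submit}" \
    --master yarn \
    --deploy-mode cluster \
    --files "$log4j_file" \
    --class "$app_class" \
    "$app_jar"
}

# Example (commented out so that sourcing this file has no side effects):
# submit_with_log4j /path/to/log4j.properties com.example.MyApp my-app.jar
```

Keep in mind the caveat quoted above: with this option the executors and the application master share one configuration, so a file appender may collide when they run on the same node.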

The following walks through the second approach:

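- Add the following to the spark-defaults.conf file under *$SPARK_CONF_DIR*:

  ```properties
  spark.driver.extraJavaOptions -Dlog4j.configuration=file:/opt/spark/current/conf/log4j-driver.properties
  spark.executor.extraJavaOptions -Dlog4j.configuration=file:/opt/spark/current/conf/log4j-executor.properties
  ```

  With this in place, every application logs according to log4j-driver and log4j-executor by default. If an application needs its own logging setup, pass the settings with --conf at submit time to override spark-defaults.conf.

- Create log4j-driver.properties on the driver node and log4j-executor.properties on the executor nodes. If the driver node is also a YARN executor node, create both files on it. Make sure log4j.appender.file.file differs between the two files; otherwise driver and executor output will end up mixed in the same log file.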

  Here is a sample log4j-driver.properties:

  ```properties
  # Log everything to the console and to the file appender
  log4j.rootCategory=INFO, console, file
  log4j.appender.console=org.apache.log4j.ConsoleAppender
  log4j.appender.console.target=System.err
  log4j.appender.console.layout=org.apache.log4j.PatternLayout
  log4j.appender.console.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n

  # Set log file
  log4j.appender.file=org.apache.log4j.DailyRollingFileAppender
  log4j.appender.file.append=true
  log4j.appender.file.file=/opt/spark/current/logs/spark-driver.log
  log4j.appender.file.layout=org.apache.log4j.PatternLayout
  log4j.appender.file.layout.ConversionPattern=%d{yy/MM/dd HH:mm:ss} %p %c{1}: %m%n

  # Settings to quiet third party logs that are too verbose
  log4j.logger.org.spark-project.jetty=WARN
  log4j.logger.org.spark-project.jetty.util.component.AbstractLifeCycle=ERROR
  log4j.logger.org.apache.spark.repl.SparkIMain$exprTyper=INFO
  log4j.logger.org.apache.spark.repl.SparkILoop$SparkILoopInterpreter=INFO
  log4j.logger.org.apache.parquet=ERROR
  log4j.logger.parquet=ERROR
  ```

- Submit the application, then check the log4j log file.
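The two points above that are easiest to get wrong are keeping the driver and executor log files separate and overriding spark-defaults.conf for a single application. The sketch below is one possible way to handle both: it derives an executor configuration from the driver one with a different log file, then submits with --conf. The function names, the class com.example.MyApp, and my-app.jar are hypothetical, and the SPARK_SUBMIT override exists only to allow a dry run.

```shell
#!/usr/bin/env bash
# Sketch: per-application logging overrides for the second approach.
# All paths, the class name and the jar are hypothetical placeholders.

# Derive an executor config from the driver config, pointing the file
# appender at a different log file so the two never write to the same path.
# Assumes executor_log contains no '|' or '&' characters (special to sed here).
make_executor_props() {
  local driver_props="$1" executor_props="$2" executor_log="$3"
  sed "s|^log4j\.appender\.file\.file=.*|log4j.appender.file.file=${executor_log}|" \
    "$driver_props" > "$executor_props"
}

# Submit with --conf so these settings override spark-defaults.conf.
submit_with_custom_logging() {
  local driver_props="$1" executor_props="$2" app_class="$3" app_jar="$4"
  # SPARK_SUBMIT defaults to spark-submit on PATH; set it to `echo` for a dry run.
  "${SPARK_SUBMIT:-spark-submit}" \
    --master yarn \
    --conf "spark.driver.extraJavaOptions=-Dlog4j.configuration=file:${driver_props}" \
    --conf "spark.executor.extraJavaOptions=-Dlog4j.configuration=file:${executor_props}" \
    --class "$app_class" \
    "$app_jar"
}
```

As the quoted documentation notes, files referenced with the file: protocol must already exist locally on every node, so the generated executor file still has to be copied to the executor nodes by whatever means you normally use.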

Configuring the Spark History Server

Log files alone are often not enough. Sometimes we need to review past runs, which requires starting the History Server on the driver node.

- 在*$SPARK_CONF_DIR*下面的

spark-defaults.conf文件中添加EventLog和History Server的配置这里注意要创建/opt/spark/current/spark-events路径,application的执行历史才会保存到该路径。1

2

3

4

5

6# EventLog

spark.eventLog.enabled true

spark.eventLog.dir file:///opt/spark/current/spark-events

# History Server

spark.history.provider org.apache.spark.deploy.history.FsHistoryProvider

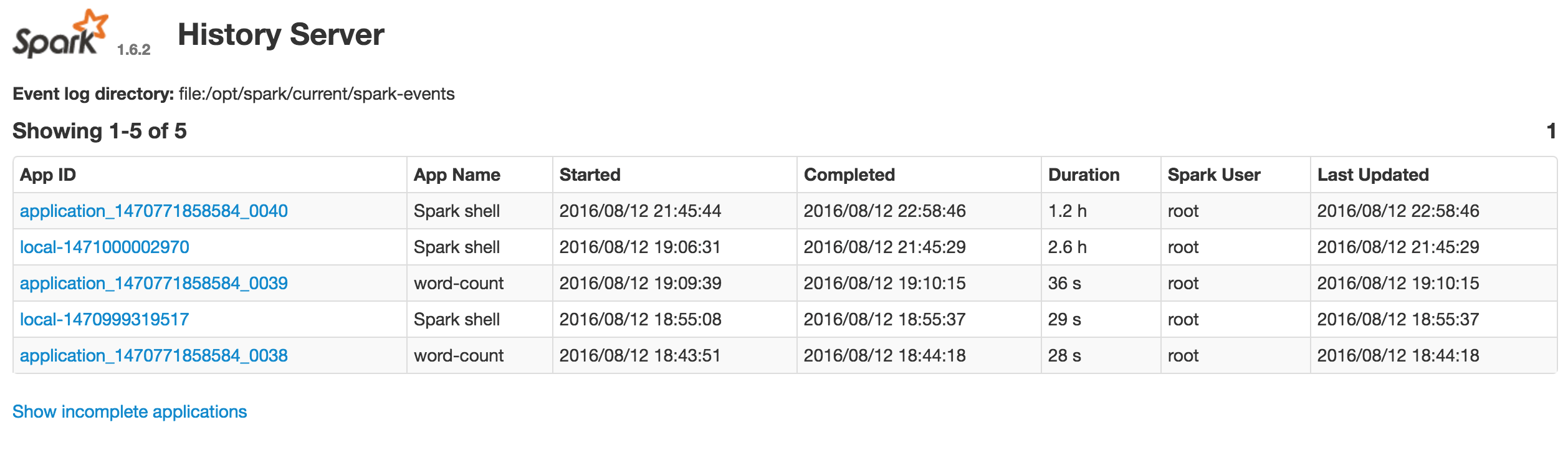

spark.history.fs.logDirectory file:/opt/spark/current/spark-events - 执行启动命令启动成功后可以访问driver节点的18080端口,查看历史记录

1

./sbin/start-history-server.sh
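The two steps above can be combined into a small helper that creates the event log directory before launching the server. This is only a sketch: the function name is made up for illustration, and the default paths simply mirror the ones used in this post.

```shell
#!/usr/bin/env bash
# Sketch: make sure the event log directory exists, then start the History Server.
# The defaults mirror the paths used in this post; adjust them for your install.

start_history_server() {
  local spark_home="${1:-/opt/spark/current}"
  local events_dir="${2:-$spark_home/spark-events}"
  # Without this directory, applications have nowhere to write their event logs.
  mkdir -p "$events_dir" || return 1
  "$spark_home/sbin/start-history-server.sh"
}

# Example (commented out so that sourcing this file has no side effects):
# start_history_server /opt/spark/current
```

Besides the web UI, the History Server also exposes a REST API on the same port, so something like curl http://<driver-host>:18080/api/v1/applications should list the recorded applications once at least one has run with event logging enabled.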

For spark.history.provider, the official distribution currently ships only one implementation, org.apache.spark.deploy.history.FsHistoryProvider, which can store the EventLog on a local file system or in HDFS.
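Since FsHistoryProvider reads from whatever location the applications write to, both settings need to point at the same place. A minimal sketch for switching them together, assuming an HDFS location, is shown below; the function name and the hdfs://namenode:8020/spark-events URI are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: point the event log writer and the History Server at one location.
# The HDFS URI in the example is a hypothetical placeholder.

append_eventlog_conf() {
  local conf_file="$1" log_uri="$2"
  # Appends only; remove any older spark.eventLog.* lines by hand first.
  cat >> "$conf_file" <<EOF
spark.eventLog.enabled true
spark.eventLog.dir ${log_uri}
spark.history.fs.logDirectory ${log_uri}
EOF
}

# Example (commented out so that sourcing this file has no side effects):
# append_eventlog_conf "$SPARK_CONF_DIR/spark-defaults.conf" hdfs://namenode:8020/spark-events
```

As with the local path, the target directory has to exist before applications run; on HDFS that would typically mean creating it with hdfs dfs -mkdir -p first.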

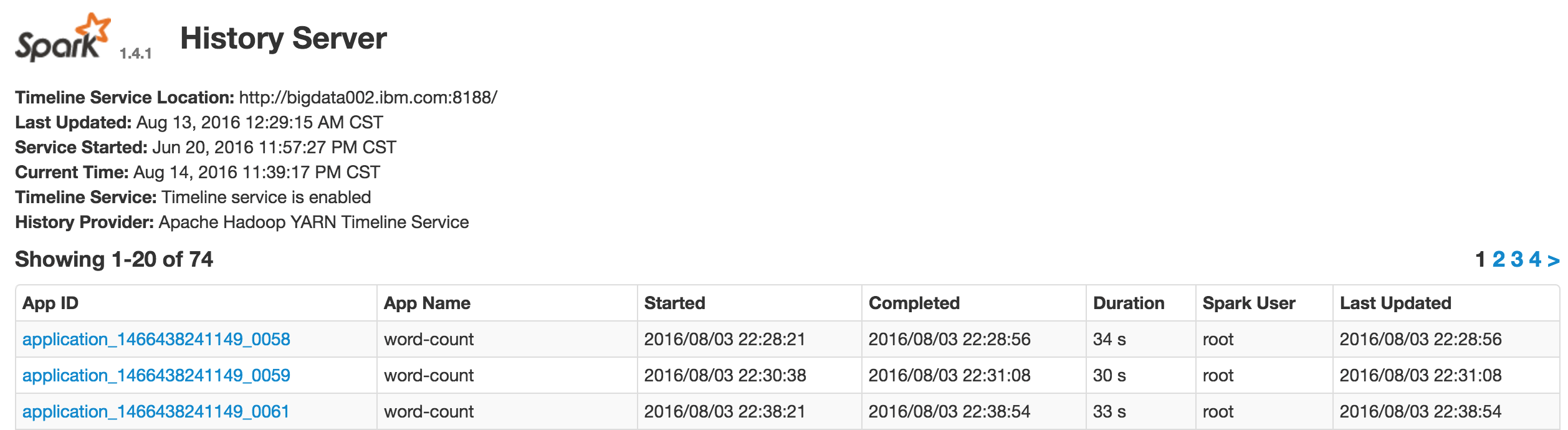

此外,在Hortonworks的HDP-2.4.0.0以下的版本中,自带的Spark提供了一个YarnHistoryProvider的实现,可以和Yarn的History Server自动集成,无需再配置Eventlog,仅需配置spark.history.provider和spark.yarn.services:

1 | spark.history.provider org.apache.spark.deploy.yarn.history.YarnHistoryProvider |

UI上有一些区别:

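From HDP-2.4.0.0 onward, this implementation is no longer shipped for the time being because of compatibility problems. If you are interested, you can download the source code, then build and test it yourself.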